Everything in Moderation: a COVID-19 Content Policy Study

A huge young Vogon guard stepped forward and yanked them out of their straps with his huge blubbery arms. “You can’t throw us into space,” yelled Ford, “we’re trying to write a book.”

“Resistance is useless!” shouted the Vogon guard back at him. It was the first phrase he’d learnt when he joined the Vogon Guard Corps.

The Hitchhiker’s Guide to the Galaxy (1979)

At the moment when the world of globalized movement stopped in March 2020, and both imaginable and unimaginable activities shifted online, there was an underlying fear: will the Internet take it? The answer depends on how one looks at the question. The technical infrastructure didn’t fall to pieces, although several services experienced interruptions and crashes, while some platforms such as Zoom monopolized the market. But the Internet also implies people conducting services, both visible and invisible ones, as well as dramatic changes in working (or firing) and living conditions for many who represent the human glue within this infrastructure.

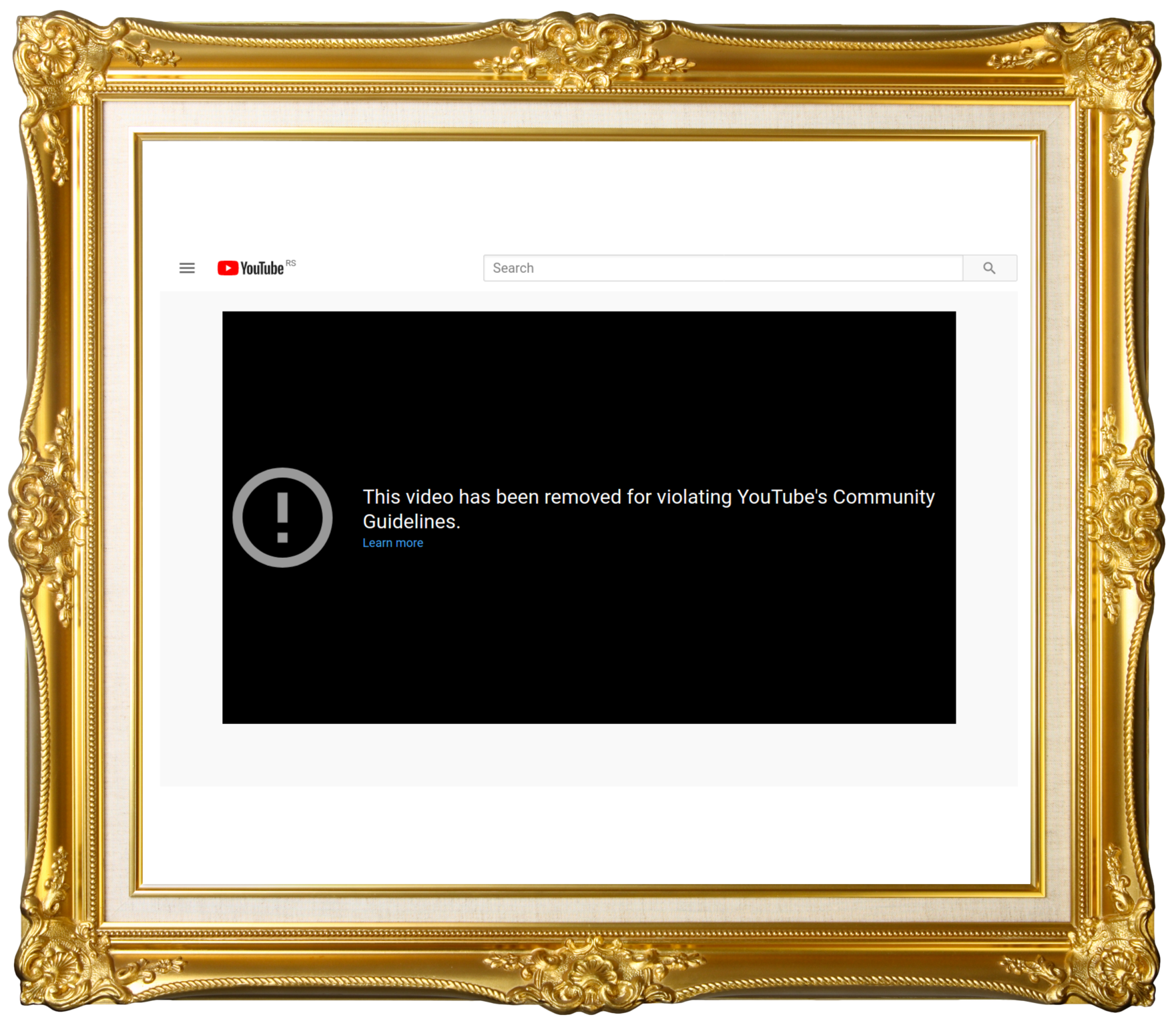

Due to the regulations regarding social distancing, Facebook and YouTube sent many of their content moderators home, relying on automated software instead, as their security policies don’t allow work from home. The result has been the removal of problematic as well as legitimate content, in a wave of algorithmic protection of Internet users. Conspiracy theories, politicians’ tweets, crypto content, news and science articles have all suddenly and equally disappeared, blending together in the algorithmic realm of data overpolicing. What this builds is a system in which the most important binary opposition has become approved versus disapproved, up to the point where every piece of content online would need to fall into one of the two categories. At the same time, many people are being detained around the globe by authoritarian governments, from journalists reporting on hospital conditions to people posting on social media.

Portrait of a censored tweet by Venezuela’s President Nicolás Maduro endorsing a natural remedy that could “eliminate the infectious genes” of the COVID-19 virus

With fear and with physical and digital repressive measures on the rise, how are different services approaching this moment of social vulnerability? What will content policy and the treatment of personal data look like after the crisis has passed? How will the current constrained physical and communication space affect future places and modes of expression? How far will automated tools be given the space to decide on subtle social contexts? And when will the effect on the many humans affected be considered?

Fake news, misinformation and the media pandemic around the COVID-19 crisis appeared in a moment of rising restrictions in content policy within social media and other online platforms. It appears as though the pandemic arrived at a perfect moment to test a real-world scenario of the content moderation performance of algorithms. Facebook is encouraging users to take information only from the WHO, while in parallel asking its users for health data for research purposes. YouTube released a report at the end of August 2020, providing insights into their Community Guidelines Enforcement, in which every aspect is supported by data, offered as a legitimizing factor in place of an explanation of what this data is showing. In their Responsible Policy Enforcement blog post they acknowledged the act of overpolicing, as a choice between potential under-enforcement or potential over-enforcement of content removal. In a way, these actions are a testament to Flusser’s writing on apparatuses “functioning as an end in themselves, automatically as it were, with the single aim of maintaining and improving themselves”. In the words of YouTube (now owned by Google): “Human review is not only necessary to train our machine learning systems, it also serves as a check, providing feedback that improves the accuracy of our systems over time.” What this means is that they put a higher priority on the accuracy and development of their systems than on the issue of content, which they openly say in this report. Additionally, in their report, they invite YouTube viewers to help flag content for free, the work that would otherwise be done badly by algorithms, and only at this point are viewers referred to as a community. Not only do the services improve through community-driven content, but also through community-driven, in other words free, content verification.
The period of COVID and the unprecedented shift of all communication to online services is also the moment when GPT-3 surfaced, as the ultimate embodiment of machine learning optimization, hand in hand with growing textual data online.

Portrait of a censored theory that the design of the new 20 pound note has a symbol of COVID-19 and a 5G tower

But there is another obscured question at hand here. What happened to the many people who were conducting the excruciating job of watching hours of disturbing content, developing PTSD while doing the work, as recently confirmed via a lawsuit against Facebook? Much has been written about automatic content removal, but not a whole lot about the people who had previously been employed, most commonly through an outsourced agency, to do that work. Did they relocate to other services? Did they get fired? How does this add to their already vulnerable, problematic, insufficiently researched and unprotected working conditions? Who is accountable for their wellbeing in light of the type of work that they do?

YouTube was recently sued by a former content policy moderator, who stated that their “wellness coaches”, people who are not licensed to provide professional medical support, were not available for people who worked evening shifts. The lawsuit alleges that moderators had to pay for their own medical treatment when it was needed. From the lawsuit we are also able to find out that moderators need to review 100 to 300 pieces of video content each day with an “error rate” of 2% to 5%. This number alone is sufficient to understand that an assessment of the psychological effects of this exposure should be a requirement before this type of work is even allowed to take place.

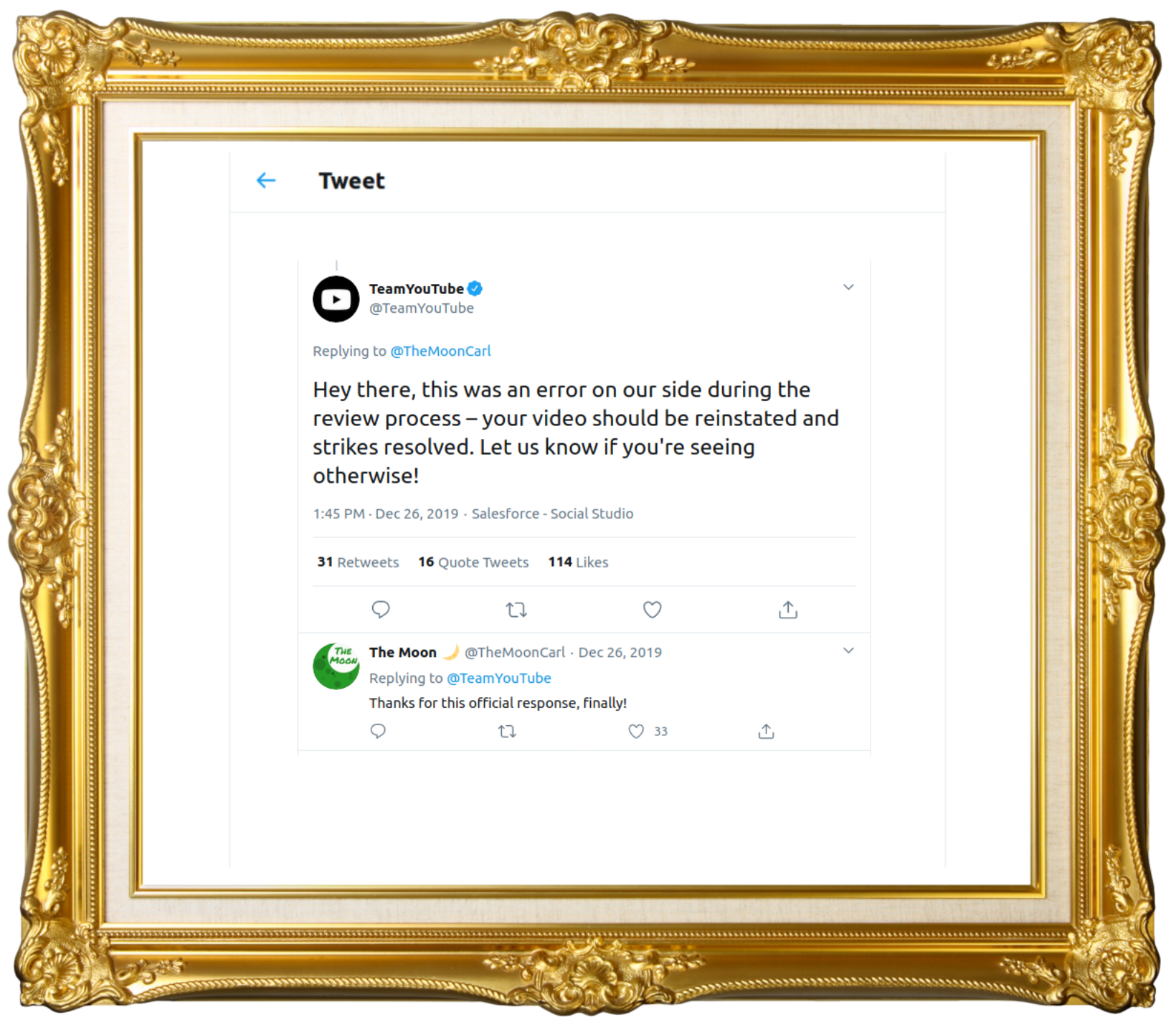

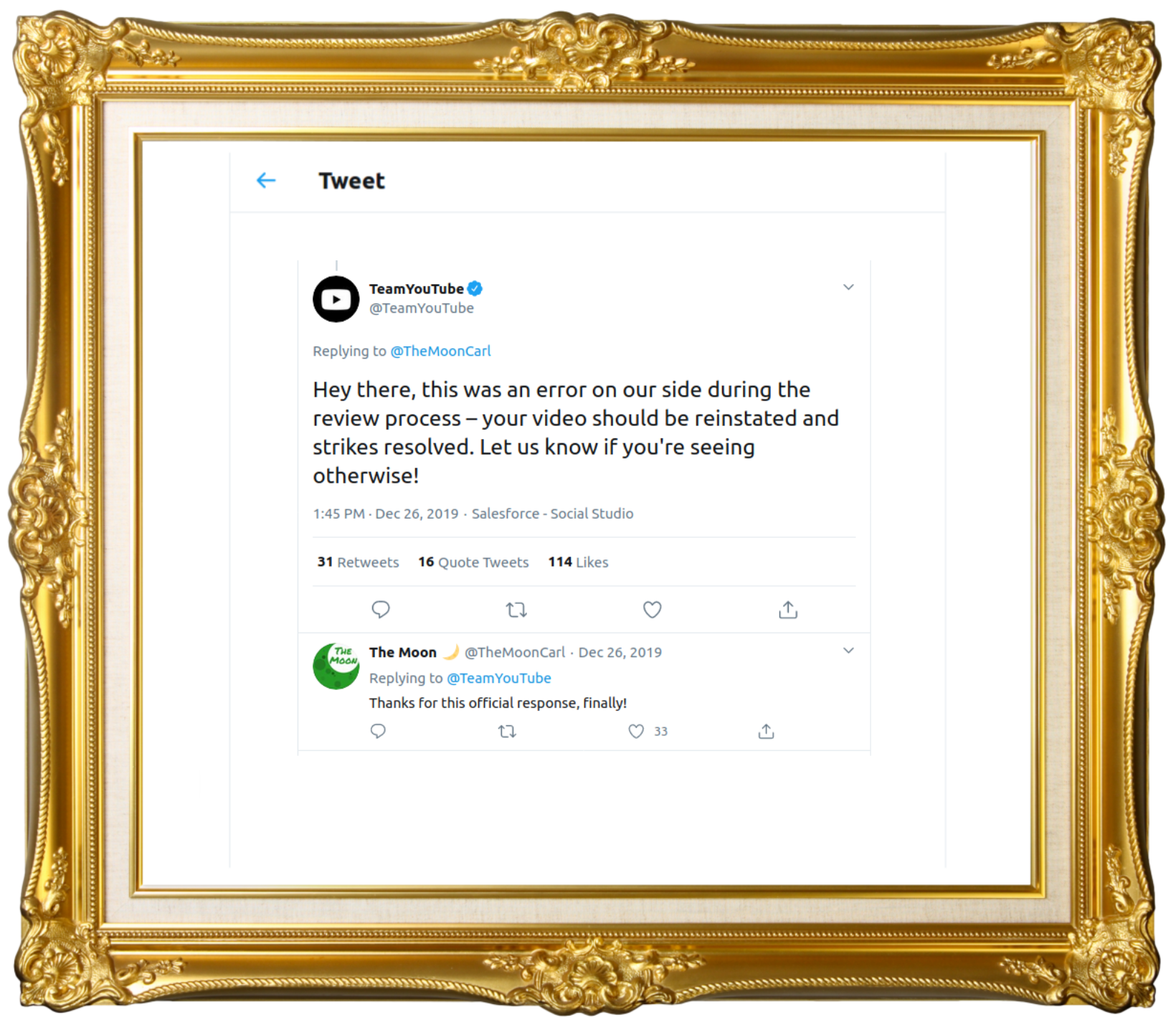

Portrait of an admitted mistake in content removal

Faced with consumer and, more importantly, advertiser dissatisfaction, YouTube slowly completes a full circle of absurdity, for clear reasons of efficiency and economic interest, by returning to human content moderation from the end of September 2020. This alone is intriguing, considering the growing concern about the type of work done by content moderators, the growing number of lawsuits against these companies, and their problematic practice of outsourcing the contracts, and with them the terms of work of content moderators, to other companies. Together with this return of the necessity for human evaluation, YouTube also announces another algorithmic assistive technology: that of fact checking. Considering the last few months and the results of algorithmic decision-making, one is left with the question: in which way can algorithmic fact checking be more sophisticated than the algorithmic removal of content? What is especially intriguing in these statements is the insistence on facts, and on the reliability of technology to recognize them, in a post-truth world where even humans are debating the verifiability of data sources.

As a portrait of the current moment in time, what we are left with is the message of pervasive and total censorship of what is allowed to be visible, in terms of underlying mechanisms, working conditions and the actual content posted on platforms alike. It is a polarization into true and false, appropriate and not appropriate, in which these services act in the position of social and ethical arbiter, flattening ontology at the same time, towards a glossy, two-dimensional image. But an absurd one, almost like Vogon poetry, or the conspiracy theories that flourish in repressive conditions. To come back to Douglas Adams, resistance is never useless; it is a basic necessity.

Portrait of a Times of Israel post treated as spam