On the gaydar: A 100-year challenge for facial recognition

Have you seen the recent 10-year challenge on social media - people posting their personal photos from 2008 and 2018 side by side? Our collective data consciousness increased after Cambridge Analytica, so the probable purpose of this meme was quickly recognised by some: free and, more importantly, verified data for training facial recognition to handle ageing.

‘AI can tell you anything about anyone with enough data.’ I came across this quotation from Brian Brackeen, CEO of a face recognition company, in a Guardian article about a study on artificial intelligence (AI) from Stanford University which uses facial recognition to determine sexuality. The title of the study ‘Deep neural networks are more accurate than humans at detecting sexual orientation from facial images’ also makes a claim about the potential of AI. But can AI really do what the article says it can? And should AI be used to determine just about anything about just about anyone? Where does the future of personal data lie and what does digital policy have to say about it?

Let’s unpack some of the assumptions behind facial recognition AI based on the example in this study. Let’s highlight the specific issues with applications of such predictive AI software, zooming in on historical and current social implications for a data-driven world.

The binary trap of the AI ‘gaydar’

By relying on prenatal hormone theory and its implication for the connection between facial traits and hormonal influences on sexuality, the study reveals the presumption that sexuality is not a personal choice, but is bound by biology. If that relationship is understood as a given, the ramifications are far from subtle for further types of correlations based on facial recognition (racial, gender, and political discrimination just for starters).

To list a few of the issues at hand, prenatal hormone theory relies on homogeneous datasets of individuals coming largely from one country. A physical ‘biological’ feature can have a different purpose, depending on the culture the individual is from. Sexuality, let alone gender, is also contextually different from culture to culture. Both are now largely accepted to be fluid, a view that stems in part from the research published by Alfred Kinsey in the 1940s and 1950s. And speaking of fluid, the ‘gaydar’ study referenced in the Guardian was built on a distinction between heterosexual and homosexual preferences only; it doesn’t cover bisexual or transgender people. This limited spectrum is questionable: How valid is the sample used? Does the approach used in the ‘gaydar’ study to define sexuality reduce our understanding of both gender and sexual preference to something binary and naturally given?

More generally, though, this example opens the broader issue of relying on AI algorithms on several levels. The first, most direct level concerns the narrow scope of the datasets algorithms are trained on, coupled with the lack of information on (or time devoted to understanding) what exact hypothesis the algorithm is based on. In the case of the AI gaydar, further research, including an analysis by two AI specialists working at Google, has examined the basis of the paper’s claim. Results show that most of the characteristics differentiating faces perceived as heterosexual from faces perceived as homosexual are matters of presentation (use of make-up, hairstyle, tan) rather than features people are born with. And the datasets are not standardised. The image sources, mainly people’s dating and Facebook profile pictures, differed in image quality, light, and angle of framing, for example.

Your face looks familiar!

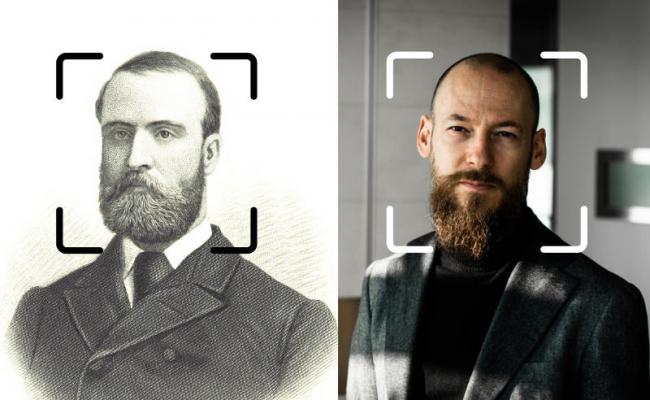

Sexual minorities as a vulnerable social group are not accepted in many countries. Software such as this could be manipulated to hunt down non-heterosexual individuals. Such a practice shouldn’t be allowed. One of the principles of the Internet, outlined in many documents such as the WSIS Declaration of Principles or the EU Commission Compact for the Internet, is the explicit protection of vulnerable groups, meaning there should be procedures preventing a scenario like this from happening. The same applies to other types of vulnerable groups, as they are the ones targeted the most by social prejudice and discrimination in society.

Physiognomy, the practice of determining human traits from physical characteristics, has a long history, from differentiating types of personalities, to differentiating sanity from madness, to criminology, to ethnic categorisation. What the history of this system has shown is that once the data started being measured, the system revealed itself as extremely speculative and has been dismissed as having no scientific value. Not only is it speculative, it has proven to be one of the most dangerous historical ideologies, the basis for scientific racism in the nineteenth century, closely related to the establishment of the eugenics movement and the rise of Nazism.

The danger of using facial recognition has been recognised (pun intended) by the authors of the gaydar paper, who note that the misuse of targeting someone’s sexual preference could have grave consequences for the privacy of individuals and our society as a whole. Coupled with other biometric and personal data, facial recognition can easily be used for political purposes against individuals, expanding existing facial recognition policing systems. But will this fascination with a new technology and the possibilities of measuring blind us? Will we repeat the same mistakes we made with old technologies and revive social misunderstanding and prejudice?

We shape our tools and, thereafter, our tools shape us - John Culkin (1967)

Machine learning works by creating patterns. Yet the variables that build a pattern are often assumed to be understood. Say, researchers feed an algorithm with a lot of data and label it; for example, photographs of heterosexual people. Then, when it is given new data, the algorithm takes the data it is fed with and says, oh this is similar to that existing labelled group of data. But the algorithm’s reasoning can be something completely different from what the researchers claim. They might argue that a factor in determining heterosexual vs homosexual is the size of the jaw, but the algorithm actually finds a pattern in the angle of taking the portrait photo (angle from below).
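To make the jaw-versus-camera-angle point concrete, here is a minimal sketch in Python. Everything in it is a made-up illustration, not the method or data of the study discussed above: the feature names `jaw_size` and `from_below`, the 90% figure, and the one-rule ‘model’ are all assumptions chosen to show how an algorithm can latch onto an artefact of how photos were taken.

```python
import random

def make_photos(n, angle_matches_label, rng):
    """Generate synthetic (features, label) pairs. Purely illustrative.

    jaw_size is random noise in [0, 1), unrelated to the label.
    from_below is a camera-angle flag that equals the label with
    probability `angle_matches_label`.
    """
    photos = []
    for _ in range(n):
        label = rng.randint(0, 1)
        jaw_size = rng.random()
        if rng.random() < angle_matches_label:
            from_below = float(label)
        else:
            from_below = float(1 - label)
        photos.append(({"jaw_size": jaw_size, "from_below": from_below}, label))
    return photos

def rule_accuracy(photos, feature, threshold=0.5):
    """Accuracy of the rule 'predict 1 when the feature exceeds the threshold'."""
    correct = sum((x[feature] > threshold) == bool(y) for x, y in photos)
    return correct / len(photos)

def fit_one_rule(photos, features):
    """Pick the single feature whose rule scores best on the training photos."""
    return max(features, key=lambda f: rule_accuracy(photos, f))

rng = random.Random(0)

# Training photos: the camera angle happens to track the label 90% of the time.
train = make_photos(2000, angle_matches_label=0.9, rng=rng)
chosen = fit_one_rule(train, ["jaw_size", "from_below"])
train_acc = rule_accuracy(train, chosen)

# New photos: the angle no longer tracks the label at all.
new = make_photos(2000, angle_matches_label=0.5, rng=rng)
new_acc = rule_accuracy(new, chosen)

print("feature the model relied on:", chosen)
print("accuracy on training-like photos:", round(train_acc, 2))
print("accuracy on new photos:", round(new_acc, 2))
```

In this toy setup the rule scores well on the photos it was built from, yet what it has learned is the angle of the camera, so it does no better than a coin toss once the photos are taken differently. A researcher looking only at the first accuracy figure could easily credit the jaw.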

While an algorithm can be developed to deal accurately with cases applicable to the existing datasets it’s trained on, it’s not equipped to deal with context not covered by those datasets. What happens to the data which doesn’t fit, which isn’t easily classified?

Furthermore, machine learning algorithms learn from the specific datasets they are fed. If these datasets are biased, corrupted, or flawed in any way, they directly build a distorted reality in the software produced. And while machine intelligence can be effective in many cases, it can also be problematic if applied without human evaluation. The process of evaluation is further hampered by ‘automation bias’, i.e., the default trust we place in an automated system rather than in our own observations. This has been demonstrated in several time-critical environments.

With all of this taken into account, the gaydar case could be nominated for a Failed Machine Learning award, qualifying by its assertion of a simplistic and binary idea of gender and sexuality, and by its assumption of the efficiency of the algorithm. Facial recognition software has repeatedly been found to identify women, especially women of colour, less accurately. Examples include predictive AI software such as Amazon Rekognition, already broadly used by police in several US states, which shows alarming levels of discrimination based on race and gender. Then there are more famous examples in everyday technologies, such as the facial recognition software on regular computers featured in the viral video ‘HP computers are racist’.

What these examples show is that products often reveal their discrimination only once they are already in use. In other words, they are inadequately reviewed before release.
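One way to picture what a pre-release review could look like is to measure accuracy separately for each group of people rather than as a single overall figure. The sketch below is a hypothetical illustration in Python: the group names, the numbers, and the `MAX_GAP` threshold are invented for the example and do not describe any real product or any vendor’s actual process.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Compute accuracy per group.

    records: iterable of (group, true_label, predicted_label) tuples.
    Returns a dict mapping each group to its share of correct predictions.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        correct[group] += int(truth == predicted)
    return {group: correct[group] / totals[group] for group in totals}

def largest_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())

# Invented evaluation results, for illustration only.
records = (
    [("group_a", 1, 1)] * 95 + [("group_a", 1, 0)] * 5
    + [("group_b", 1, 1)] * 70 + [("group_b", 1, 0)] * 30
)

overall = sum(truth == predicted for _, truth, predicted in records) / len(records)
per_group = accuracy_by_group(records)
gap = largest_gap(per_group)

# Hypothetical release rule: block the release if groups differ by more than this.
MAX_GAP = 0.05
ready_for_release = gap <= MAX_GAP

print("overall accuracy:", overall)
print("accuracy per group:", per_group)
print("ready for release:", ready_for_release)
```

The single overall number hides the problem; only the per-group breakdown shows that one group is served far worse than the other. Where to set a threshold like `MAX_GAP`, and which groups to check, are human decisions that no script can make on its own.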

Greater expectations and higher accountability

We need to be a little more sceptical when approaching software which is advertised as able to analyse or predict traits, behaviours, and other things human. And not because we should be techno-sceptics, but because the data for the software can import various flawed assumptions which can have extremely serious consequences for people’s privacy and safety.

Brad Smith, president of Microsoft, in his blog post Facial recognition: It’s time for action, mentions the regulation of facial recognition technology as a primary focus for 2019, highlighting a shared responsibility between the tech and government sectors. He argues that key steps should be taken by governments to adopt laws on facial recognition, in order to allow healthy competition between companies and prevent privacy intrusion and discrimination against individuals. In parallel, Microsoft, a leading facial recognition software player, has defined six facial recognition principles which it follows: Fairness, Transparency, Accountability, Non-discrimination, Notice and Consent, and Lawful Surveillance.

While these six principles map out a solid ground for developing more accurate software and following democratic laws, the question remains: how do we know a certain company or state is being transparent and fair when they use and interpret personal biometric data? In an international market, who will hold companies accountable for following or refusing to follow these principles? And under which legislation should the regulation of data privacy of cloud-based services fall? This context becomes exponentially more complex and opaque with speculative privacy-invasive software in countries which are less grounded in democratic values and respect for human rights.

Limits on the application of facial recognition technologies to people’s personal lives should be thoroughly defined in an international treaty and set of guidelines. The General Data Protection Regulation (GDPR) in the European Union offers one possible angle to approach such an issue by requiring affirmative consent for collection of biometrics from EU citizens. Most facial recognition algorithms rely on publicly available photos - which are by default accessed and taken without the people portrayed in the photos knowing about it. Under the GDPR, by contrast, a company operating in Europe must ask for people’s approval to use their personal data, such as portraits, when developing facial recognition software. And, as argued by the Algorithmic Justice League, more algorithmic accountability is needed. They’ve developed a platform for people to report bias and request evaluations of facial recognition software. In this data-driven world of ours, more work needs to be done to better understand and regulate data consent, in other words, our say as users as to who can collect and use our data, when they can collect and use it, and under what circumstances.

Perhaps most important, though, is the need to limit the extent of conclusions based solely on automation and algorithms used. Informed human evaluation, taking into account possible historical and contextual bias, is necessary. AI algorithms need to be analysed to correct existing biases. We want to avoid ending up in a world of perpetuating discrimination, reinforced by the technology we use. And sometimes, maybe it’s not enough to correct existing visible biases; trying to predict human behaviour can often raise more problems than it solves. AI can interpret data, but, as we see in examples from Amazon Rekognition to the AI gaydar study, it cannot understand the subtle strands of context, such as those belonging to sexual identity that can sometimes have serious consequences for personal privacy and safety, especially in the context of already marginalised groups in society.